###Hue安装配置

Hue是一个开源的Apache Hadoop UI系统,最早是由Cloudera Desktop演化而来,由Cloudera贡献给开源社区,它是基于Python Web框架Django实现的。通过使用Hue我们可以在浏览器端的Web控制台上与Hadoop集群进行交互来分析处理数据,例如操作HDFS上的数据,运行MapReduce Job等

Hue所支持的功能特性集合:

- 默认基于轻量级sqlite数据库管理会话数据,用户认证和授权,可以自定义为MySQL、Postgresql,以及Oracle

- 基于文件浏览器(File Browser)访问HDFS

- 基于Hive编辑器来开发和运行Hive查询

- 支持基于Solr进行搜索的应用,并提供可视化的数据视图,以及仪表板(Dashboard)

- 支持基于Impala的应用进行交互式查询

- 支持Spark编辑器和仪表板(Dashboard)

- 支持Pig编辑器,并能够提交脚本任务

- 支持Oozie编辑器,可以通过仪表板提交和监控Workflow、Coordinator和Bundle

- 支持HBase浏览器,能够可视化数据、查询数据、修改HBase表

- 支持Metastore浏览器,可以访问Hive的元数据,以及HCatalog

- 支持Job浏览器,能够访问MapReduce Job(MR1/MR2-YARN)

- 支持Job设计器,能够创建MapReduce/Streaming/Java Job

- 支持Sqoop 2编辑器和仪表板(Dashboard)

- 支持ZooKeeper浏览器和编辑器

- 支持MySql、PostGresql、Sqlite和Oracle数据库查询编辑器

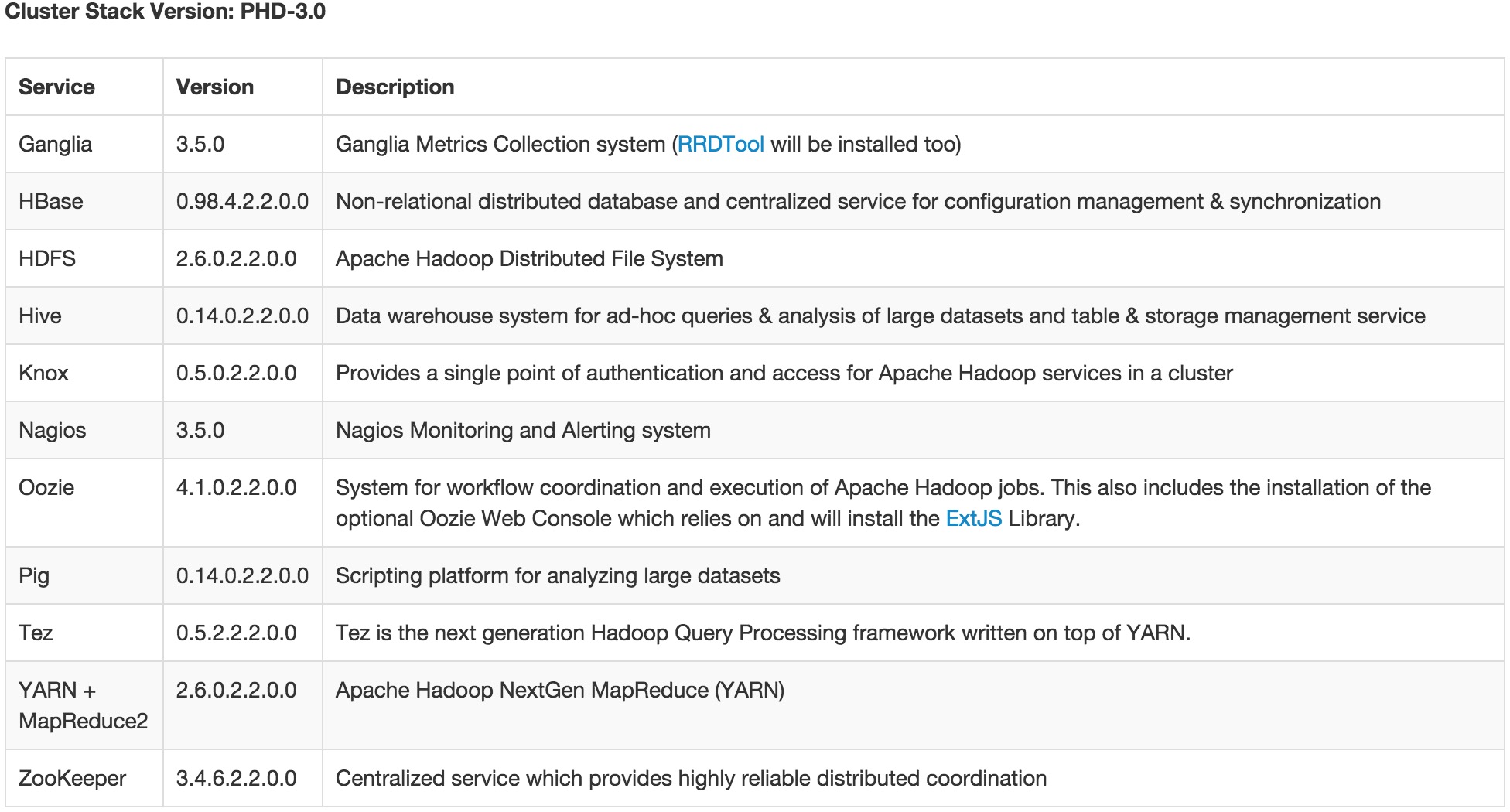

####已有的环境说明 CentOS release 6.6 (Final) phd3.0 client

####环境准备

- 安装maven

1

2

3

wget http://mirror.bit.edu.cn/apache/maven/maven-3/3.3.3/binaries/apache-maven-3.3.3-bin.tar.gz

tar -zxvf apache-maven-3.3.3-bin.tar.gz

cp -r apache-maven-3.3.3 /usr/local/

编辑1

/etc/profile

1

2

3

export M2_HOME=/usr/local/apache-maven-3.3.3

export JAVA_HOME=/usr/java/jdk1.7.0_80

export PATH=$PATH:$JAVA_HOME/bin:$M2_HOME/bin

- 下载hue的所有rpm包

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

wget https://www.dropbox.com/s/v7o5mxybvuhy4l4/hue-all-3.7.1-1.el6.x86_64.zip

[root@phd3-c hue-all]# ll

总用量 44292

-rwxr-xr-x 1 root root 1936 10月 16 11:28 hue-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 273664 10月 16 11:28 hue-beeswax-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 39078380 10月 16 11:28 hue-common-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 1264868 10月 16 11:28 hue-doc-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 474732 10月 16 11:28 hue-hbase-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 36320 10月 16 11:28 hue-impala-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 355576 10月 16 11:28 hue-pig-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 41228 10月 16 11:28 hue-rdbms-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 3580276 10月 16 11:28 hue-search-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 62324 10月 16 11:28 hue-security-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 4220 10月 16 11:28 hue-server-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 45964 10月 16 11:28 hue-spark-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 67244 10月 16 11:28 hue-sqoop-3.7.1-1.el6.x86_64.rpm

-rwxr-xr-x 1 root root 40724 10月 16 11:28 hue-zookeeper-3.7.1-1.el6.x86_64.rpm

- 安装依赖包

1

yum -y install cyrus-sasl-gssapi cyrus-sasl-plain libxml2 libxslt zlib python sqlite python-psycopg2

1

2

3

4

5

6

7

8

9

sudo yum -y install ./hue-common-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-server-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-rdbms-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-zookeeper-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-pig-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-hbase-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-beeswax-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-sqoop-3.7.1-1.el6.x86_64.rpm

sudo yum -y install ./hue-impala-3.7.1-1.el6.x86_64.rpm

####启动hue服务

1

/etc/init.d/hue start

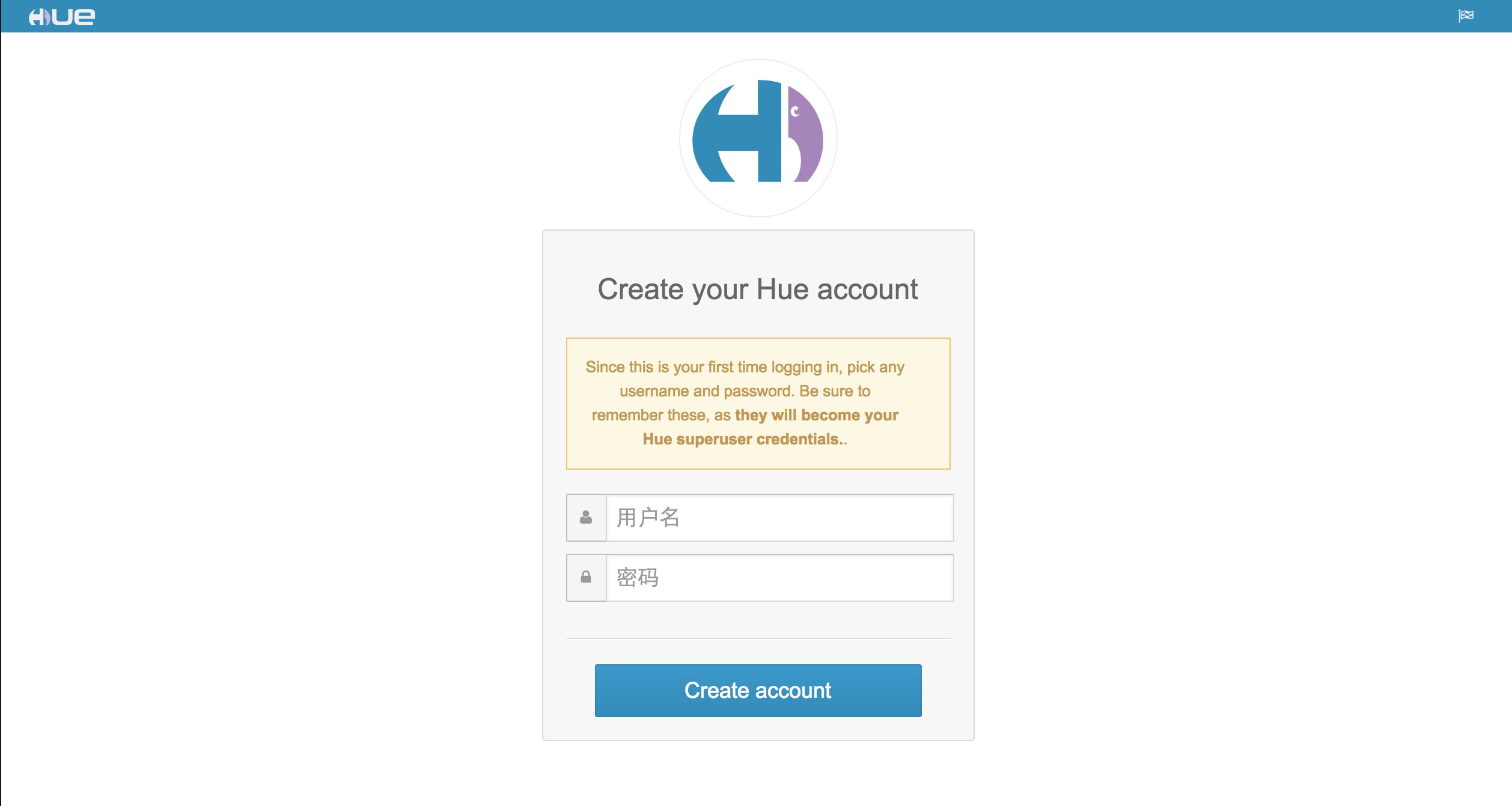

默认是8888端口访问

提示您先创建一个管理严账户

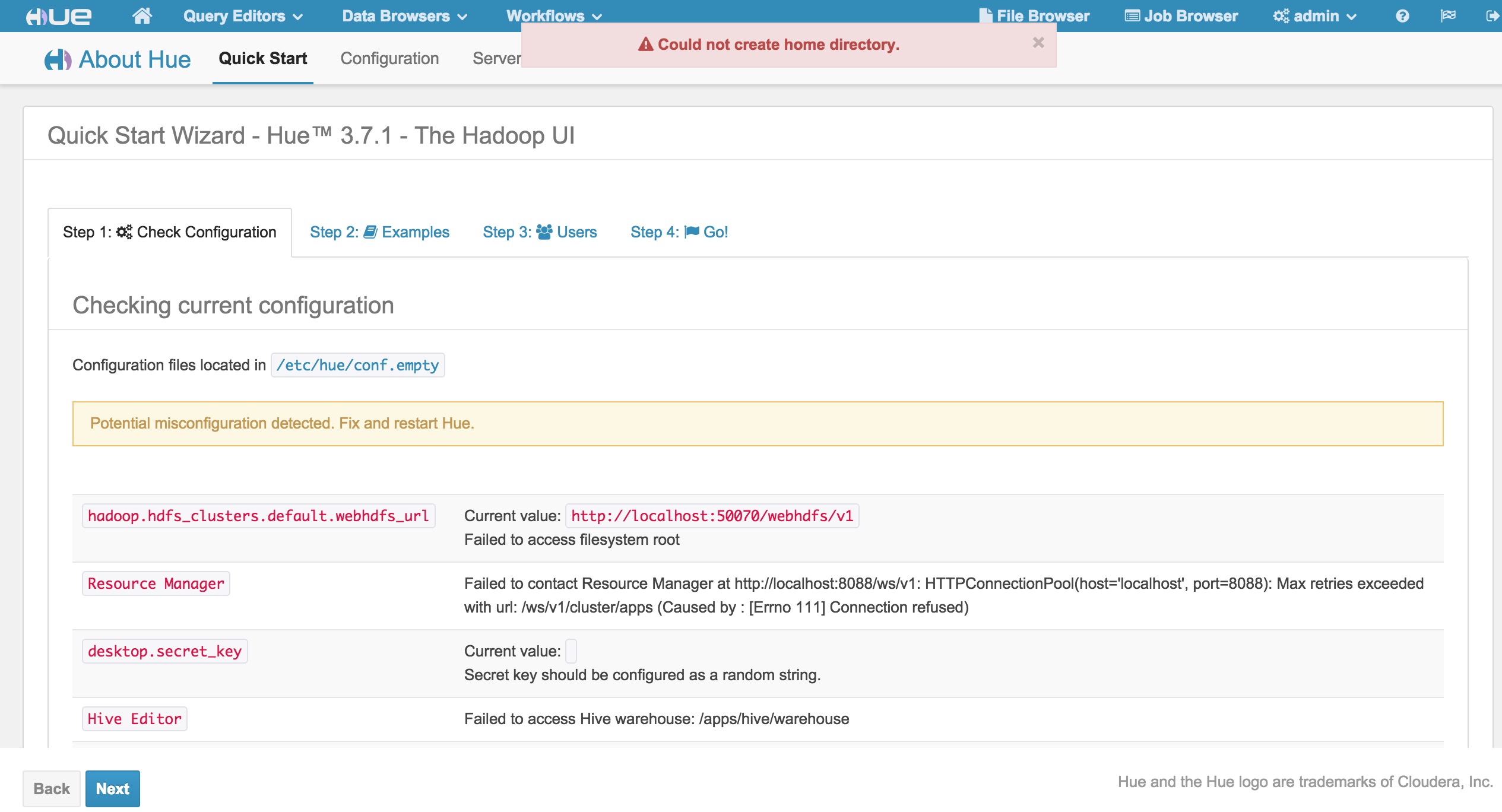

然后进入主界面,但是因为没有配置信息,所以界面提示要修改配置

提示您先创建一个管理严账户

然后进入主界面,但是因为没有配置信息,所以界面提示要修改配置

####配置hue-server

修改配置文件1

/etc/hue/conf/hue.ini

desktop的配置:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

[desktop]

# Set this to a random string, the longer the better.

# This is used for secure hashing in the session store.

secret_key=huangjie

# Webserver listens on this address and port

http_host=0.0.0.0

http_port=8888

# Time zone name

time_zone=Asia/Shanghai

# Comma separated list of apps to not load at server startup.

# e.g.: pig,zookeeper

# Bigtop does not bundle impala

app_blacklist=impala,indexer,pig

rdbms的配置:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

[librdbms]

# The RDBMS app can have any number of databases configured in the databases

# section. A database is known by its section name

# (IE sqlite, mysql, psql, and oracle in the list below).

[[databases]]

# mysql, oracle, or postgresql configuration.

## [[[mysql]]]

# Name to show in the UI.

nice_name="hue server db"

# For MySQL and PostgreSQL, name is the name of the database.

# For Oracle, Name is instance of the Oracle server. For express edition

# this is 'xe' by default.

name=hue

# Database backend to use. This can be:

# 1. mysql

# 2. postgresql

# 3. oracle

engine=mysql

# IP or hostname of the database to connect to.

host=10.2.29.70

# Port the database server is listening to. Defaults are:

# 1. MySQL: 3306

# 2. PostgreSQL: 5432

# 3. Oracle Express Edition: 1521

port=3306

# Username to authenticate with when connecting to the database.

user=hue

# Password matching the username to authenticate with when

# connecting to the database.

password=xxxxxx

hadoop配置部分:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

[hadoop]

# Configuration for HDFS NameNode

# ------------------------------------------------------------------------

[[hdfs_clusters]]

# HA support by using HttpFs

[[[default]]]

# Enter the filesystem uri

fs_defaultfs=hdfs://phd3-m1.xxb.cn:8020

# Use WebHdfs/HttpFs as the communication mechanism.

# Domain should be the NameNode or HttpFs host.

# Default port is 14000 for HttpFs.

webhdfs_url=http://phd3-m1.xxb.cn:50070/webhdfs/v1

# Configuration for YARN (MR2)

# ------------------------------------------------------------------------

[[yarn_clusters]]

[[[default]]]

# Enter the host on which you are running the ResourceManager

resourcemanager_host=phd3-m1.xxb.cn

# The port where the ResourceManager IPC listens on

resourcemanager_port=8030

# Whether to submit jobs to this cluster

submit_to=True

# Resource Manager logical name (required for HA)

## logical_name=

# Change this if your YARN cluster is Kerberos-secured

## security_enabled=false

# URL of the ResourceManager API

resourcemanager_api_url=http://phd3-m1.xxb.cn:8088

# URL of the ProxyServer API

## proxy_api_url=http://localhost:8088

# URL of the HistoryServer API

# history_server_api_url=http://phd3-m3.xxb.cn:19888

liboozie配置:

1

2

3

4

5

6

7

8

9

10

[liboozie]

# The URL where the Oozie service runs on. This is required in order for

# users to submit jobs. Empty value disables the config check.

oozie_url=http://phd3-m3.xxb.cn:11000/oozie

# Requires FQDN in oozie_url if enabled

## security_enabled=false

# Location on HDFS where the workflows/coordinator are deployed when submitted.

## remote_deployement_dir=/user/hue/oozie/deployments

beeswax配置(hive):

1

2

3

4

5

6

7

8

9

10

11

[beeswax]

# Host where HiveServer2 is running.

# If Kerberos security is enabled, use fully-qualified domain name (FQDN).

hive_server_host=phd3-m2.xxb.cn

# Port where HiveServer2 Thrift server runs on.

hive_server_port=10000

# Hive configuration directory, where hive-site.xml is located

hive_conf_dir=/etc/hive/conf

hbase配置:

1

2

3

4

[hbase]

# Comma-separated list of HBase Thrift servers for clusters in the format of '(name|host:port)'.

# Use full hostname with security.

hbase_clusters=(Cluster|phd3-m3.xxb.cn:9090)

zookeeper配置:

1

2

3

4

5

6

7

8

9

10

11

[zookeeper]

[[clusters]]

[[[default]]]

# Zookeeper ensemble. Comma separated list of Host/Port.

# e.g. localhost:2181,localhost:2182,localhost:2183

host_ports=phd3-m1.xxb.cn:2181,phd3-m2.xxb.cn,phd3-m3.xxb.cn

# The URL of the REST contrib service (required for znode browsing)

rest_url=http://phd3-m1.xxb.cn:9998

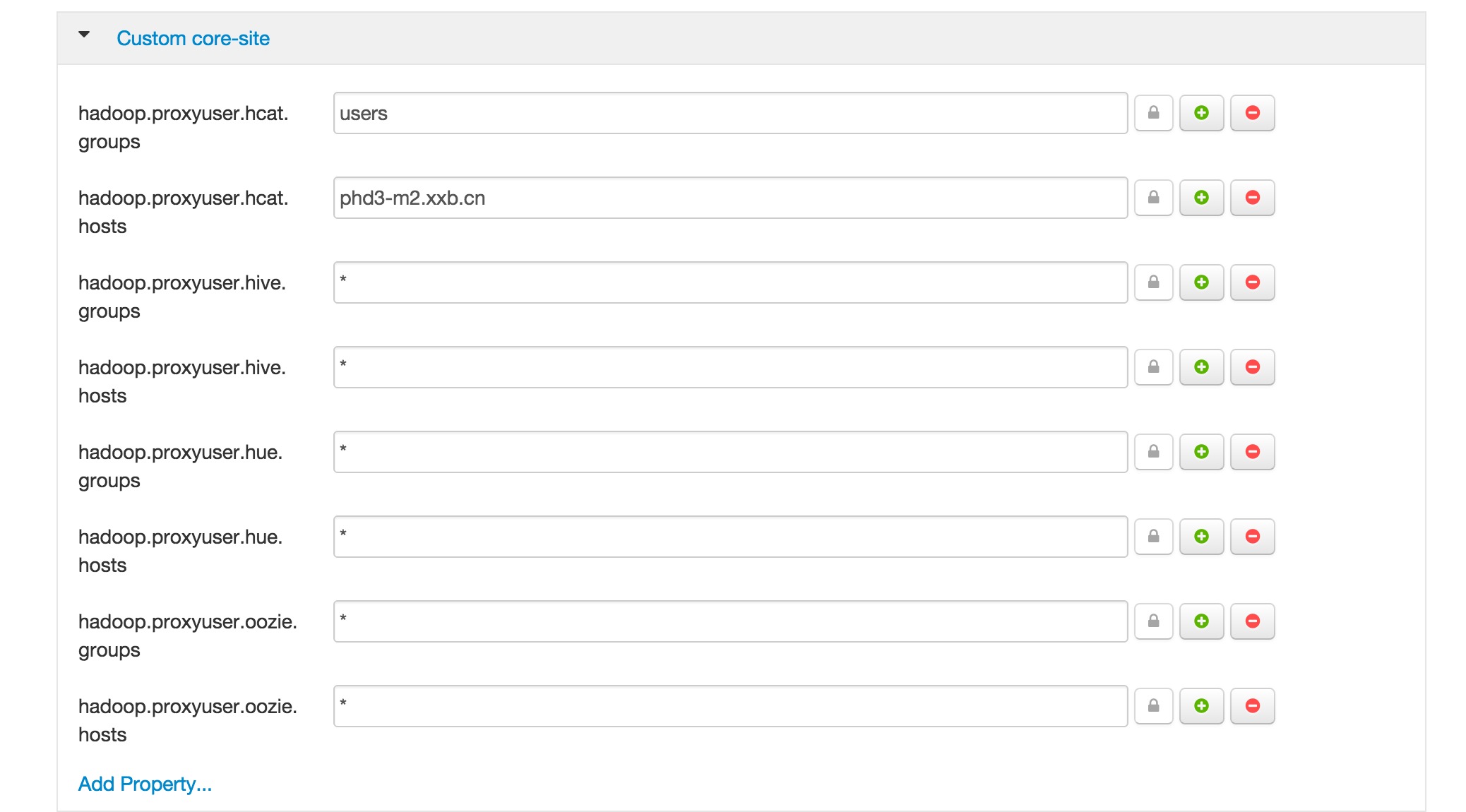

####hadoop需要修改的配置

- 通过Ambari修改hdfs配置,对应修改的文件是

1

core-site.xml

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

<property>

<name>hadoop.proxyuser.hive.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hive.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hue.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.oozie.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.oozie.hosts</name>

<value>*</value>

</property>

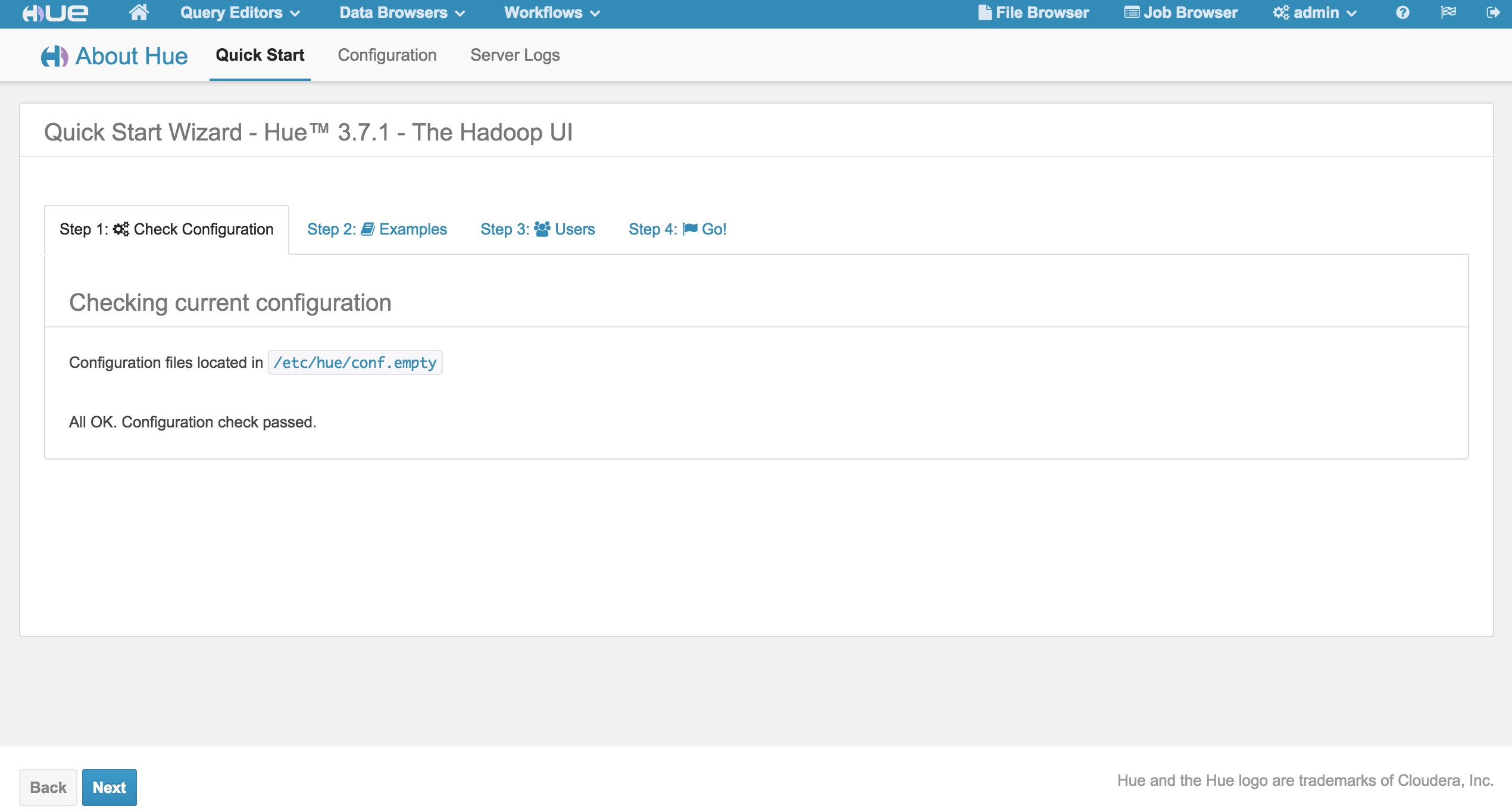

####配置完成后

- 重启hue-server,然后再次check configuration,看看是否还有报错信息

1

/etc/init.d/hue restart

- 如果没有别的异常,则会看到如下界面

-

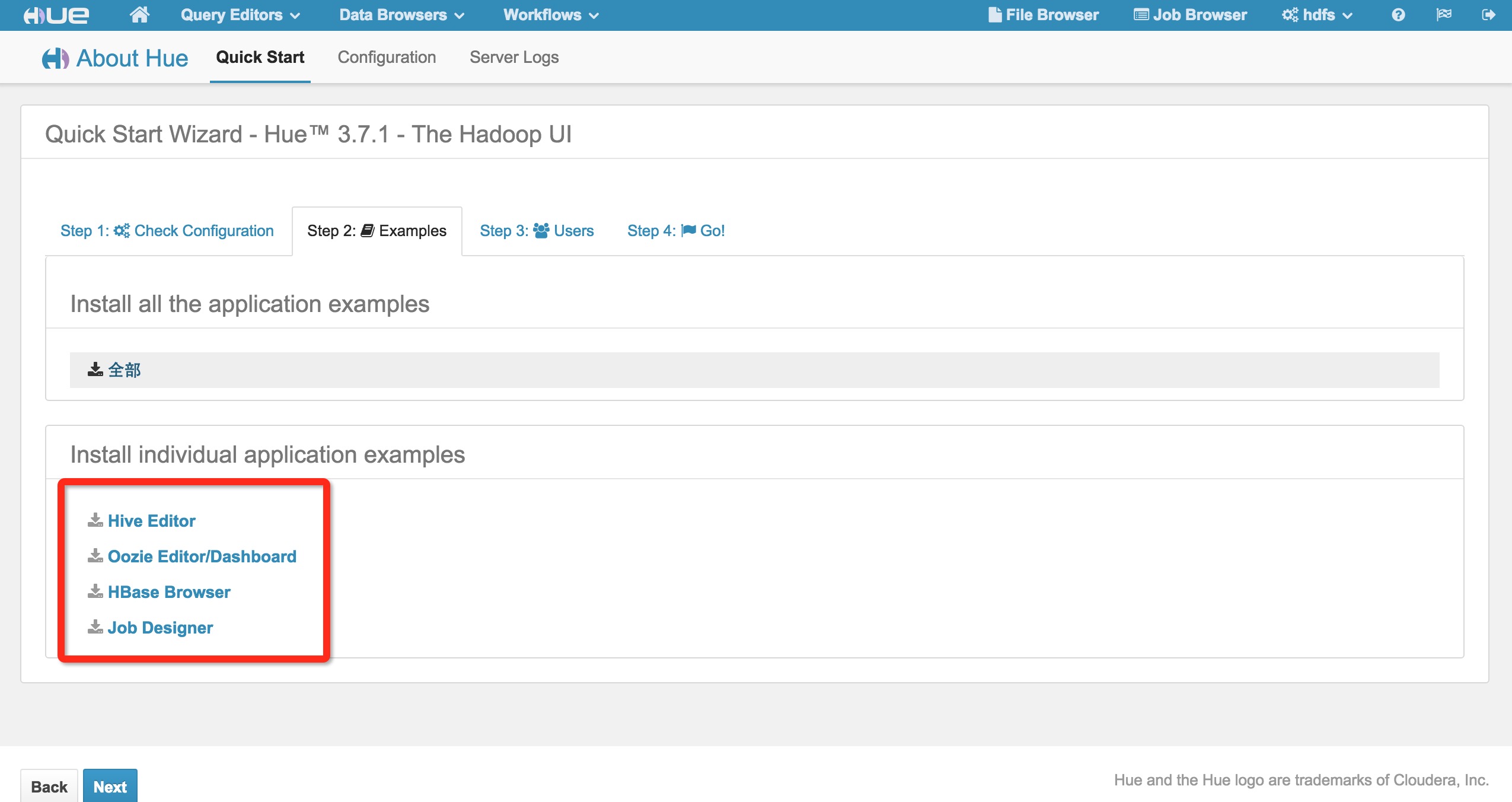

安装自带的example程序

-

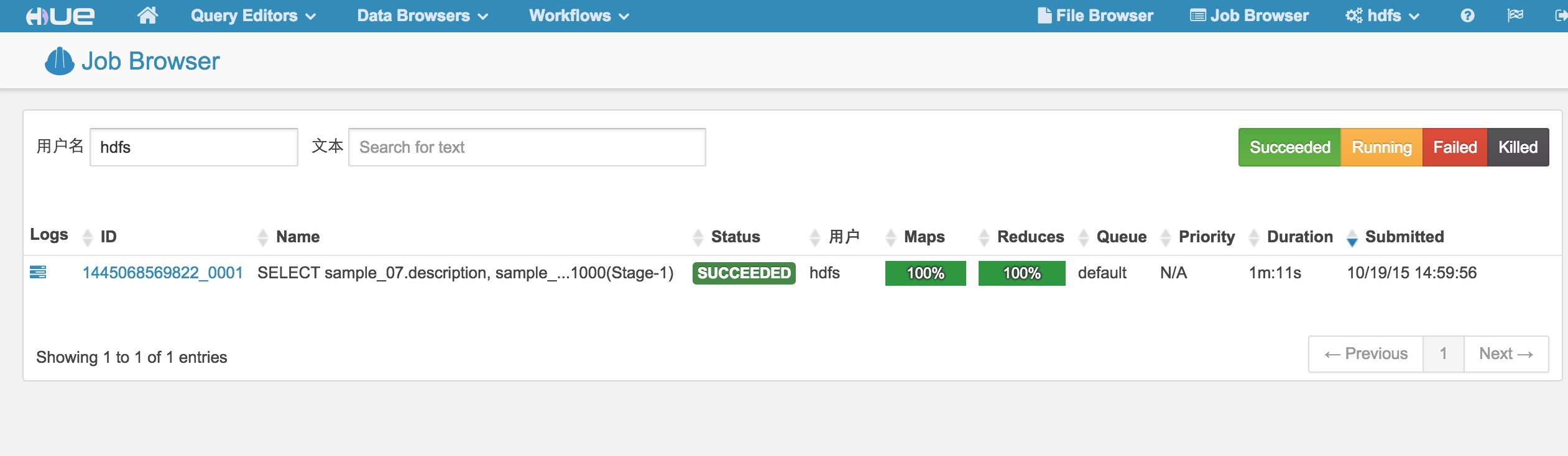

最后测试一下hive的job是否能够成功执行

####常见错误处理

- hue中创建hdfs用户,并授予管理员权限,否则有可能在安装hive-example的时候没有写hdfs文件系统的权限,

1

Permission denied: user=admin, access=WRITE, inode="/user":hdfs:hdfs:drwxr-xr-x

- 通过Ambari管理的hadoop,一定要通过ambari修改配置信息,否则会遇到

1

The Oozie server is not available

- 如果使用hbase的话,一定要在hbase服务器上,启动thrift server,

1

nohup /usr/bin/hbase thrift start &